Inseq

Interpretability for sequence generation models 🐛 🔍

Description

Inseq is a PyTorch-based hackable toolkit to democratize access to common post-hoc interpretability analyses of sequence generation models.

Installation

Inseq is available on PyPI and can be installed with pip for Python >= 3.10, <= 3.13:

```bash
# Install latest stable version
pip install inseq

# Alternatively, install latest development version
pip install git+https://github.com/inseq-team/inseq.git
```

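Install extras for visualization in Jupyter Notebooks and 🤗 datasets attribution with `pip install inseq[notebook,datasets]`.

To install the package from source, clone the repository and run the following commands:

```bash
git clone https://github.com/inseq-team/inseq.git
cd inseq
make uv-download  # Download and install the uv package manager
make install      # Installs the package and all dependencies via uv sync
```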

For library developers, you can use the make install-dev command to install all development dependencies (quality, docs, extras).

After installation, you should be able to run make fast-test and make lint without errors.

- Installing the `tokenizers` package requires a Rust compiler installation. You can install Rust from https://rustup.rs and add `$HOME/.cargo/env` to your PATH.
- Installing `sentencepiece` requires various packages; install them with `sudo apt-get install cmake build-essential pkg-config` or `brew install cmake gperftools pkg-config`.

Example usage in Python

This example uses the Integrated Gradients attribution method to attribute the English-French translation of a sentence taken from the WinoMT corpus:

```python
import inseq

model = inseq.load_model("Helsinki-NLP/opus-mt-en-fr", "integrated_gradients")
out = model.attribute(
    "The developer argued with the designer because her idea cannot be implemented.",
    n_steps=100
)
out.show()
```

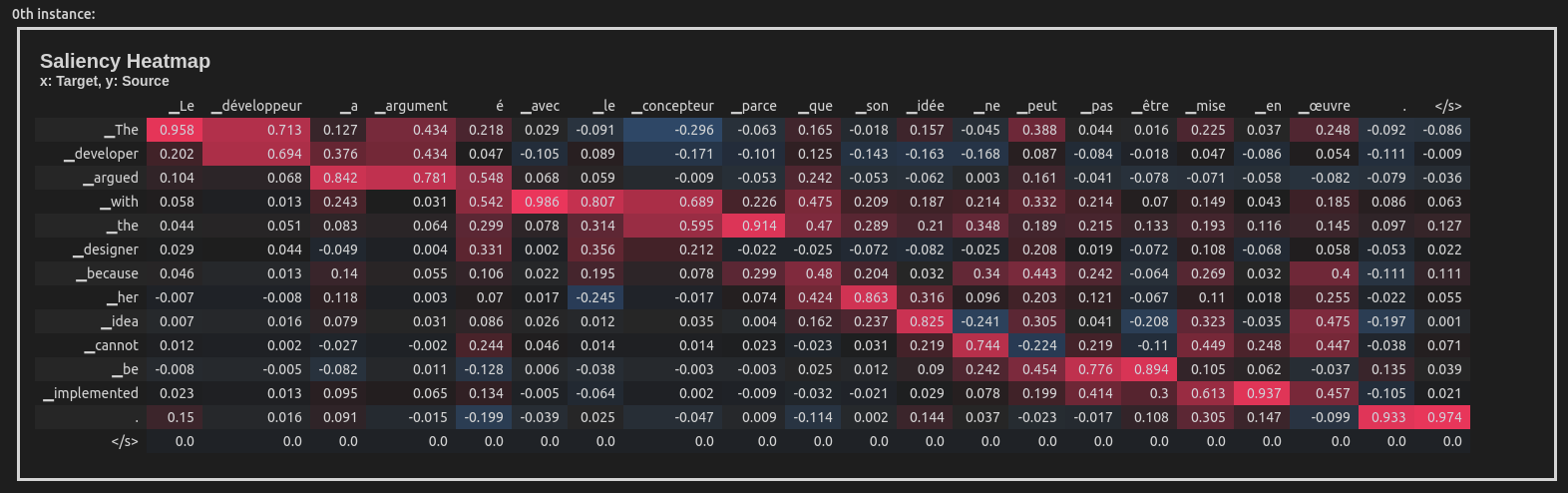

This produces a visualization of the attribution scores for each token in the input sentence (token-level aggregation is handled automatically). Here is what the visualization looks like inside a Jupyter Notebook:

Inseq also supports decoder-only models such as GPT-2, enabling usage of a variety of attribution methods and customizable settings directly from the console:

```python
import inseq

model = inseq.load_model("gpt2", "integrated_gradients")
model.attribute(
    "Hello ladies and",
    generation_args={"max_new_tokens": 9},
    n_steps=500,
    internal_batch_size=50
).show()
```
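Gradient-based methods such as Integrated Gradients typically produce one score per embedding dimension of each input token, which must be reduced to a single score per token before the token-level display mentioned above. The sketch below shows one common reduction, assuming an L2 norm over the embedding dimension followed by normalization so that the scores sum to 1; Inseq's actual default aggregation may differ, and `aggregate_token_scores` is a hypothetical helper, not part of the library API.

```python
import math


def aggregate_token_scores(per_dim_scores: list[list[float]]) -> list[float]:
    """Hypothetical helper: collapse a (num_tokens x hidden_dim) attribution
    matrix into one score per token (assumed: L2 norm, then sum-to-1)."""
    # L2 norm of each token's attribution vector over the embedding dimension.
    norms = [math.sqrt(sum(v * v for v in row)) for row in per_dim_scores]
    total = sum(norms)
    if total == 0:
        return [0.0] * len(norms)
    # Normalize so that the token scores sum to 1.
    return [n / total for n in norms]


# Made-up attributions: 3 tokens with 2 embedding dimensions each.
scores = [[3.0, -4.0], [0.0, 5.0], [6.0, 8.0]]
print(aggregate_token_scores(scores))  # [0.25, 0.25, 0.5]
```

Using a norm discards the sign of individual dimensions, so the resulting scores express magnitude of importance rather than direction.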

Features

- 🚀 Feature attribution of sequence generation for most `ForConditionalGeneration` (encoder-decoder) and `ForCausalLM` (decoder-only) models from 🤗 Transformers.
- 🚀 Support for multiple feature attribution methods, extending the ones supported by Captum.
- 🚀 Post-processing, filtering and merging of attribution maps via `Aggregator` classes.
- 🚀 Attribution visualization in notebooks, browser and command line.
- 🚀 Efficient attribution of single examples or entire 🤗 datasets with the Inseq CLI.
- 🚀 Custom attribution of target functions, supporting advanced methods such as contrastive feature attributions and context reliance detection.
- 🚀 Extraction and visualization of custom scores (e.g. probability, entropy) at every generation step alongside attribution maps.
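Merging an attribution map, for example collapsing subword tokens into whole words, can be pictured as summing the scores of contiguous pieces. The following plain-Python sketch does not use the `Aggregator` API: `merge_subwords` is a hypothetical helper, it assumes SentencePiece-style tokens where `▁` marks the start of a new word, and the token split and scores are made up for illustration.

```python
def merge_subwords(
    tokens: list[str], scores: list[float], marker: str = "▁"
) -> tuple[list[str], list[float]]:
    """Hypothetical helper: sum subword scores into word-level scores.

    Assumes a new word starts at every token beginning with `marker`.
    """
    words: list[str] = []
    merged: list[float] = []
    for tok, score in zip(tokens, scores):
        if tok.startswith(marker) or not words:
            # Start a new word (strip the word-boundary marker).
            words.append(tok.lstrip(marker))
            merged.append(score)
        else:
            # Continuation piece: append to the current word and add its score.
            words[-1] += tok
            merged[-1] += score
    return words, merged


tokens = ["▁The", "▁develop", "er", "▁argued"]  # hypothetical tokenization
scores = [0.25, 0.25, 0.125, 0.375]
print(merge_subwords(tokens, scores))
# (['The', 'developer', 'argued'], [0.25, 0.375, 0.375])
```

Summation preserves the total attribution mass; other reductions (mean, max) are equally plausible choices depending on the analysis.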

Supported methods

Use the inseq.list_feature_attribution_methods function to list all available method identifiers and inseq.list_step_functions to list all available step functions. The following methods are currently supported:

Gradient-based attribution

- `saliency`: Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps (Simonyan et al., 2013)
- `input_x_gradient`: Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps (Simonyan et al., 2013)
- `integrated_gradients`: Axiomatic Attribution for Deep Networks (Sundararajan et al., 2017)
- `deeplift`: Learning Important Features Through Propagating Activation Differences (Shrikumar et al., 2017)
- `gradient_shap`: A unified approach to interpreting model predictions (Lundberg and Lee, 2017)
- `discretized_integrated_gradients`: Discretized Integrated Gradients for Explaining Language Models (Sanyal and Ren, 2021)
- `sequential_integrated_gradients`: Sequential Integrated Gradients: a simple but effective method for explaining language models (Enguehard, 2023)
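To make the difference between the first three gradient-based methods concrete, the sketch below computes them for a toy two-feature function with a hand-written gradient instead of a real model (a real implementation relies on autograd, e.g. PyTorch through Captum). Integrated Gradients is approximated here with a midpoint Riemann sum over `n_steps` points on the straight path from an all-zero baseline; both the integration rule and the baseline are assumptions, and the library's defaults may differ.

```python
def f(x: list[float]) -> float:
    # Toy differentiable "model": maps a 2-feature input to a scalar score.
    return x[0] ** 2 + 3 * x[0] * x[1]


def grad_f(x: list[float]) -> list[float]:
    # Hand-derived gradient of f (a real model would use autograd).
    return [2 * x[0] + 3 * x[1], 3 * x[0]]


def saliency(x: list[float]) -> list[float]:
    # Absolute value of the gradient w.r.t. each input feature.
    return [abs(g) for g in grad_f(x)]


def input_x_gradient(x: list[float]) -> list[float]:
    # Element-wise product of the input and its gradient.
    return [xi * g for xi, g in zip(x, grad_f(x))]


def integrated_gradients(x: list[float], baseline=None, n_steps: int = 100) -> list[float]:
    # Average gradient along the path baseline -> x, scaled by (x - baseline).
    if baseline is None:
        baseline = [0.0] * len(x)
    avg_grad = [0.0] * len(x)
    for k in range(n_steps):
        alpha = (k + 0.5) / n_steps  # midpoint of the k-th interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad_f(point)):
            avg_grad[i] += g / n_steps
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]


x = [1.0, 2.0]
print(saliency(x))          # [8.0, 3.0]
print(input_x_gradient(x))  # [8.0, 6.0]
ig = integrated_gradients(x)
print(ig, sum(ig))          # approximately [4.0, 3.0] and 7.0
```

For this quadratic toy function the integrand is linear in the path parameter, so the midpoint rule is exact up to floating-point error, and the Integrated Gradients scores add up to f(x) - f(baseline) = 7, the completeness property described by Sundararajan et al. (2017).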

Internals-based attribution

- `attention`: Attention Weight Attribution, from Neural Machine Translation by Jointly Learning to Align and Translate (Bahdanau et al., 2014)
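Attention weight attribution reuses the attention weights the model already computes as importance scores. Transformer models in 🤗 Transformers use scaled dot-product attention rather than the additive attention of Bahdanau et al., so the sketch below assumes that formulation, uses made-up query and key vectors, and averages weights across heads; averaging is only one of several possible ways to aggregate heads and layers, not necessarily what Inseq does by default.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    # Scaled dot-product attention: softmax(q . k_j / sqrt(d)) over key positions j.
    d = len(query)
    return softmax([sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys])


def head_averaged_attention(
    queries: list[list[float]], keys_per_head: list[list[list[float]]]
) -> list[float]:
    # One query vector and one list of key vectors per head; average across heads.
    per_head = [attention_weights(q, ks) for q, ks in zip(queries, keys_per_head)]
    n_positions = len(per_head[0])
    return [sum(w[j] for w in per_head) / len(per_head) for j in range(n_positions)]


# Made-up vectors: 2 heads attending over 3 source positions (d = 2).
queries = [[1.0, 0.0], [0.0, 1.0]]
keys_per_head = [
    [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.0], [0.0, 3.0]],
]
weights = head_averaged_attention(queries, keys_per_head)
print(weights)  # one score per source position, summing to 1
```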

Perturbation-based attribution

- `occlusion`: Visualizing and Understanding Convolutional Networks (Zeiler and Fergus, 2014)
- `lime`: "Why Should I Trust You?": Explaining the Predictions of Any Classifier (Ribeiro et al., 2016)
- `value_zeroing`: Quantifying Context Mixing in Transformers (Mohebbi et al., 2023)
- `reagent`: ReAGent: A Model-agnostic Feature Attribution Method for Generative Language Models (Zhao et al., 2024)
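Occlusion scores each input position by how much the model output drops when that position is masked. A minimal token-level sketch follows, assuming a placeholder `<unk>` token as the replacement and a hypothetical `toy_score` function standing in for the probability of a target token. In Captum, the `Occlusion` class instead operates on input tensors with configurable sliding windows and baselines, so the library's behavior may differ from this sketch.

```python
from collections.abc import Callable


def occlusion(
    tokens: list[str], score_fn: Callable[[list[str]], float], placeholder: str = "<unk>"
) -> list[float]:
    # Attribution of token i = original score - score with token i replaced.
    original = score_fn(tokens)
    attributions = []
    for i in range(len(tokens)):
        occluded = tokens[:i] + [placeholder] + tokens[i + 1:]
        attributions.append(original - score_fn(occluded))
    return attributions


def toy_score(tokens: list[str]) -> float:
    # Hypothetical stand-in for p(target | input): rewards "sick" and "yesterday".
    return 0.5 * tokens.count("sick") + 0.25 * tokens.count("yesterday")


print(occlusion(["George", "was", "sick", "yesterday"], toy_score))
# [0.0, 0.0, 0.5, 0.25]
```

Each position costs one extra forward pass, which is why perturbation methods become expensive on long inputs compared with a single backward pass for gradient methods.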

Step functions

Step functions are used to extract custom scores from the model at each step of the attribution process with the step_scores argument in model.attribute. They can also be used as targets for attribution methods relying on model outputs (e.g. gradient-based methods) by passing them as the attributed_fn argument. The following step functions are currently supported:

- `logits`: Logits of the target token.
- `probability`: Probability of the target token. Can also be used for log-probability by passing `logprob=True`.
- `entropy`: Entropy of the predictive distribution.
- `crossentropy`: Cross-entropy loss between target token and predicted distribution.
- `perplexity`: Perplexity of the target token.
- `contrast_logits`/`contrast_prob`: Logits/probabilities of the target token when different contrastive inputs are provided to the model. Equivalent to `logits`/`probability` when no contrastive inputs are provided.
- `contrast_logits_diff`/`contrast_prob_diff`: Difference in logits/probability between the original and foil target token pair, which can be used for contrastive evaluation as in contrastive attribution (Yin and Neubig, 2022).
- `pcxmi`: Point-wise Contextual Cross-Mutual Information (P-CXMI) for the target token given original and contrastive contexts (Yin et al., 2021).
- `kl_divergence`: KL divergence of the predictive distribution given original and contrastive contexts. Can be restricted to the most likely target token options using the `top_k` and `top_p` parameters.
- `in_context_pvi`: In-context Pointwise V-usable Information (PVI) to measure the amount of contextual information used in model predictions (Lu et al., 2023).
- `mc_dropout_prob_avg`: Average probability of the target token across multiple samples using MC Dropout (Gal and Ghahramani, 2016).
- `top_p_size`: The number of tokens with cumulative probability greater than `top_p` in the predictive distribution of the model.
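Most of these step functions are simple transformations of the model's next-token distribution at a single generation step. The sketch below computes several of them in plain Python from a made-up logit vector; `step_scores` is a hypothetical helper rather than the Inseq implementation, and `top_p_size` follows the nucleus-sampling reading (the smallest number of most likely tokens whose cumulative probability reaches `top_p`), which is an assumption.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def step_scores(logits: list[float], target_id: int, top_p: float = 0.9) -> dict:
    probs = softmax(logits)
    p_target = probs[target_id]
    crossentropy = -math.log(p_target)  # loss of the target token
    # Assumed nucleus size: fewest most-likely tokens reaching top_p cumulative probability.
    cumulative, size = 0.0, 0
    for p in sorted(probs, reverse=True):
        cumulative += p
        size += 1
        if cumulative >= top_p:
            break
    return {
        "logits": logits[target_id],
        "probability": p_target,
        "logprob": math.log(p_target),
        "entropy": -sum(p * math.log(p) for p in probs if p > 0),
        "crossentropy": crossentropy,
        "perplexity": math.exp(crossentropy),  # equals 1 / p_target for one token
        "top_p_size": size,
    }


# Made-up logits over a 4-token vocabulary; the target is token 1.
print(step_scores([2.0, 1.0, 0.5, -1.0], target_id=1))
```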

The following example computes contrastive attributions using the contrast_prob_diff step function:

```python
import inseq

attribution_model = inseq.load_model("gpt2", "input_x_gradient")

# Perform the contrastive attribution:
# Regular (forced) target -> "The manager went home because he was sick"
# Contrastive target      -> "The manager went home because she was sick"
out = attribution_model.attribute(
    "The manager went home because",
    "The manager went home because he was sick",
    attributed_fn="contrast_prob_diff",
    contrast_targets="The manager went home because she was sick",
    # We also visualize the corresponding step score
    step_scores=["contrast_prob_diff"]
)
out.show()
```

Refer to the documentation for an example including custom function registration.
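The contrastive step functions compare two target tokens or two predictive distributions. As an illustration, the plain-Python sketch below computes `contrast_prob_diff`, `pcxmi` and `kl_divergence` from made-up logits over a hypothetical three-word vocabulary (`he`, `she`, `it`). The function names mirror the step function identifiers, but the code is an assumption-based sketch, not Inseq's implementation; for instance, it ignores the `top_k`/`top_p` restriction of `kl_divergence`.

```python
import math

VOCAB = ["he", "she", "it"]  # hypothetical toy vocabulary


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def contrast_prob_diff(logits: list[float], target_id: int, foil_id: int) -> float:
    # p(target) - p(foil) at the step where the regular and contrastive targets diverge.
    probs = softmax(logits)
    return probs[target_id] - probs[foil_id]


def pcxmi(logits_orig: list[float], logits_contrast: list[float], target_id: int) -> float:
    # log p(y | original context) - log p(y | contrastive context)
    p_orig = softmax(logits_orig)[target_id]
    p_contrast = softmax(logits_contrast)[target_id]
    return math.log(p_orig) - math.log(p_contrast)


def kl_divergence(logits_p: list[float], logits_q: list[float]) -> float:
    # KL(P || Q) between two next-token distributions.
    p, q = softmax(logits_p), softmax(logits_q)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Made-up logits for the step after "The manager went home because".
original = [2.0, 0.5, -1.0]
contrastive = [1.0, 1.0, -1.0]
he, she = VOCAB.index("he"), VOCAB.index("she")
print(contrast_prob_diff(original, he, she))  # > 0: "he" preferred over "she"
print(pcxmi(original, contrastive, he))
print(kl_divergence(original, contrastive))
```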

Using the Inseq CLI

The Inseq library also provides useful client commands to enable repeated attribution of individual examples and even entire 🤗 datasets directly from the console. See the available options by typing inseq -h in the terminal after installing the package.

Three commands are supported:

- `inseq attribute`: Wrapper for enabling `model.attribute` usage in the console.
- `inseq attribute-dataset`: Extends `attribute` to full datasets using the Hugging Face `datasets.load_dataset` API.
- `inseq attribute-context`: Detects and attributes context dependence for generation tasks using the approach of Sarti et al. (2023).

All commands support the full range of parameters available for attribute, attribution visualization in the console and saving outputs to disk.

The following example performs a simple feature attribution of an English sentence translated into Italian using a MarianNMT translation model from transformers. The final result is printed to the console.

```bash
inseq attribute \
  --model_name_or_path Helsinki-NLP/opus-mt-en-it \
  --attribution_method saliency \
  --input_texts "Hello world this is Inseq\! Inseq is a very nice library to perform attribution analysis"
```

The following code can be used to perform attribution (both source and target-side) of Italian translations for a dummy sample of 20 English sentences taken from the FLORES-101 parallel corpus, using a MarianNMT translation model from Hugging Face transformers. We save the visualizations in HTML format in the file attributions.html. See the --help flag for more options.

```bash
inseq attribute-dataset \
  --model_name_or_path Helsinki-NLP/opus-mt-en-it \
  --attribution_method saliency \
  --do_prefix_attribution \
  --dataset_name inseq/dummy_enit \
  --input_text_field en \
  --dataset_split "train[:20]" \
  --viz_path attributions.html \
  --batch_size 8 \
  --hide
```

The following example uses a small LM to generate a continuation of input_current_text, and uses the additional context provided by input_context_text to estimate its influence on the generation. In this case, the output "to the hospital. He said he was fine" is produced, and the generation of the token hospital is found to depend on the context token sick according to the contrast_prob_diff step function.

```bash
inseq attribute-context \
  --model_name_or_path HuggingFaceTB/SmolLM-135M \
  --input_context_text "George was sick yesterday." \
  --input_current_text "His colleagues asked him to come" \
  --attributed_fn "contrast_prob_diff"
```
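The first stage of this context reliance analysis flags generated tokens whose prediction changes notably when the context is removed. The sketch below assumes that per-token scores comparing the with-context and without-context runs (e.g. `contrast_prob_diff`) are already available; the values are made up, not the output of the command above. It flags tokens scoring more than `std_threshold` standard deviations above the mean. This selection rule and the helper name `flag_context_sensitive` are assumptions, and the CLI's actual selection logic may differ.

```python
import statistics


def flag_context_sensitive(
    tokens: list[str], scores: list[float], std_threshold: float = 1.0
) -> list[str]:
    # Hypothetical rule: flag tokens whose score exceeds mean + k * std.
    mean = statistics.mean(scores)
    std = statistics.pstdev(scores)
    cutoff = mean + std_threshold * std
    return [tok for tok, s in zip(tokens, scores) if s > cutoff]


tokens = ["to", "the", "hospital", ".", "He", "said", "he", "was", "fine"]
scores = [0.02, 0.01, 0.6, 0.0, 0.03, 0.01, 0.02, 0.0, 0.01]  # made-up values
print(flag_context_sensitive(tokens, scores))  # ['hospital']
```

Flagged tokens would then be the targets of a contrastive attribution step that looks for the context tokens (here, plausibly sick) driving the change.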


Contributing

Our vision for Inseq is to create a centralized, comprehensive and robust set of tools to enable fair and reproducible comparisons in the study of sequence generation models. To achieve this goal, contributions from researchers and developers interested in these topics are more than welcome. Please see our contributing guidelines and our code of conduct for more information.

Citing Inseq

If you use Inseq in your research we suggest including a mention of the specific release (e.g. v0.6.0) and we kindly ask you to cite our reference paper as:

```bibtex
@inproceedings{sarti-etal-2023-inseq,
    title = "Inseq: An Interpretability Toolkit for Sequence Generation Models",
    author = "Sarti, Gabriele and
      Feldhus, Nils and
      Sickert, Ludwig and
      van der Wal, Oskar and
      Nissim, Malvina and
      Bisazza, Arianna",
    booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 3: System Demonstrations)",
    month = jul,
    year = "2023",
    address = "Toronto, Canada",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.acl-demo.40",
    doi = "10.18653/v1/2023.acl-demo.40",
    pages = "421--435",
}
```

Research using Inseq

Inseq has been used in various research projects. A list of known publications that use Inseq to conduct interpretability analyses of generative models is shown below.

> [!TIP]
> Last update: August 2024. Please open a pull request to add your publication to the list.


Reference papers

Mentions

1. Author(s): Jane Arleth dela Cruz, Iris Hendrickx, Martha Larson. Published in Communications in Computer and Information Science, Explainable Artificial Intelligence by Springer Nature Switzerland in 2024, pages 265-284. DOI: 10.1007/978-3-031-63787-2_14
2. Author(s): Erik Schultheis, S. T. John. Published in Lecture Notes in Computer Science, Machine Learning and Knowledge Discovery in Databases. Research Track and Demo Track by Springer Nature Switzerland in 2024, pages 424-428. DOI: 10.1007/978-3-031-70371-3_33
3. Author(s): Moreno La Quatra, Salvatore Greco, Luca Cagliero, Tania Cerquitelli. Published in Lecture Notes in Computer Science, Machine Learning and Knowledge Discovery in Databases: Applied Data Science and Demo Track by Springer Nature Switzerland in 2023, pages 361-365. DOI: 10.1007/978-3-031-43430-3_31

1. Author(s): Afnan Habib, Osamah F. Abdulmahmod, Mukhlis Raza, Yeong Hyeon Gu, Murat Aydoğan, Mugahed A. Al-antari. Published in 2025 9th International Artificial Intelligence and Data Processing Symposium (IDAP) by IEEE in 2025, pages 1-8. DOI: 10.1109/idap68205.2025.11222281
2. Author(s): Mahdi Dhaini, Ege Erdogan, Nils Feldhus, Gjergji Kasneci. Published in Proceedings of the 2025 ACM Conference on Fairness, Accountability, and Transparency by ACM in 2025, pages 3006-3029. DOI: 10.1145/3715275.3732192
3. Author(s): San San Maw, Ei Cherry Lwin, Win Mar, Naw Sharo Paw, Myat Mon Khaing, Thet Thet Aung. Published in 2024 IEEE Conference on Computer Applications (ICCA) by IEEE in 2024, pages 1-7. DOI: 10.1109/icca62361.2024.10532851
4. Author(s): Pasquale Riello, Keith Quille, Rajesh Jaiswal, Carlo Sansone. Published in Proceedings of the 2024 Conference on Human Centred Artificial Intelligence - Education and Practice by ACM in 2024, pages 34-40. DOI: 10.1145/3701268.3701274
5. Author(s): Vivek Miglani, Aobo Yang, Aram Markosyan, Diego Garcia-Olano, Narine Kokhlikyan. Published in Proceedings of the 3rd Workshop for Natural Language Processing Open Source Software (NLP-OSS 2023) by Empirical Methods in Natural Language Processing in 2023, pages 165-173. DOI: 10.18653/v1/2023.nlposs-1.19
6. Author(s): Nils Feldhus, Leonhard Hennig, Maximilian Nasert, Christopher Ebert, Robert Schwarzenberg, Sebastian Möller. Published in Proceedings of the 1st Workshop on Natural Language Reasoning and Structured Explanations (NLRSE) by Association for Computational Linguistics in 2023, pages 30-46. DOI: 10.18653/v1/2023.nlrse-1.4
7. Author(s): Giuseppe Attanasio, Flor Plaza del Arco, Debora Nozza, Anne Lauscher. Published in Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing by Association for Computational Linguistics in 2023, pages 3996-4014. DOI: 10.18653/v1/2023.emnlp-main.243
8. Author(s): Qing Lyu, Shreya Havaldar, Adam Stein, Li Zhang, Delip Rao, Eric Wong, Marianna Apidianaki, Chris Callison-Burch. Published in Proceedings of the 13th International Joint Conference on Natural Language Processing and the 3rd Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics (Volume 1: Long Papers) by Association for Computational Linguistics in 2023, pages 305-329. DOI: 10.18653/v1/2023.ijcnlp-main.20
9. Author(s): Javier Ferrando, Gerard I. Gállego, Ioannis Tsiamas, Marta R. Costa-jussà. Published in Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) by Association for Computational Linguistics in 2023, pages 5486-5513. DOI: 10.18653/v1/2023.acl-long.301
10. Author(s): Nils Feldhus, Qianli Wang, Tatiana Anikina, Sahil Chopra, Cennet Oguz, Sebastian Möller. Published in Findings of the Association for Computational Linguistics: EMNLP 2023 by Association for Computational Linguistics in 2023, pages 5399-5421. DOI: 10.18653/v1/2023.findings-emnlp.359
11. Author(s): Irene Benedetto, Alkis Koudounas, Lorenzo Vaiani, Eliana Pastor, Elena Baralis, Luca Cagliero, Francesco Tarasconi. Published in Proceedings of the 17th International Workshop on Semantic Evaluation (SemEval-2023) by Association for Computational Linguistics in 2023, pages 1401-1411. DOI: 10.18653/v1/2023.semeval-1.194
12. Author(s): Jirui Qi, Raquel Fernández, Arianna Bisazza. Published in Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing by Association for Computational Linguistics in 2023, pages 10650-10666. DOI: 10.18653/v1/2023.emnlp-main.658
13. Author(s): Giuseppe Attanasio, Eliana Pastor, Chiara Di Bonaventura, Debora Nozza. Published in Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics: System Demonstrations by Association for Computational Linguistics in 2023, pages 256-266. DOI: 10.18653/v1/2023.eacl-demo.29
14. Author(s): Mor Geva, Jasmijn Bastings, Katja Filippova, Amir Globerson. Published in Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing by Association for Computational Linguistics in 2023, pages 12216-12235. DOI: 10.18653/v1/2023.emnlp-main.751

1. Author(s): Salvatore Greco, Moreno La Quatra, Luca Cagliero, Tania Cerquitelli. Published in ACM Transactions on Intelligent Systems and Technology by Association for Computing Machinery (ACM) in 2025, pages 1-24. DOI: 10.1145/3729237
2. Author(s): Meysam Alizadeh, Maël Kubli, Zeynab Samei, Shirin Dehghani, Mohammadmasiha Zahedivafa, Juan D. Bermeo, Maria Korobeynikova, Fabrizio Gilardi. Published in Journal of Computational Social Science by Springer Science and Business Media LLC in 2024. DOI: 10.1007/s42001-024-00345-9
3. Author(s): Lukas Edman, Gabriele Sarti, Antonio Toral, Gertjan van Noord, Arianna Bisazza. Published in Transactions of the Association for Computational Linguistics by MIT Press in 2024, pages 392-410. DOI: 10.1162/tacl_a_00651
4. Author(s): Tiago Barbosa de Lima, Vitor Rolim, André C.A. Nascimento, Péricles Miranda, Valmir Macario, Luiz Rodrigues, Elyda Freitas, Dragan Gašević, Rafael Ferreira Mello. Published in Expert Systems with Applications by Elsevier BV in 2024, page 125097. DOI: 10.1016/j.eswa.2024.125097
5. Author(s): Federico Pianzola. Published in Interdisciplinary Science Reviews by SAGE Publications in 2024, pages 222-236. DOI: 10.1177/03080188241257167